References

https://hyunsooworld.tistory.com/entry/최대한-쉽게-설명한-논문리뷰-Attention-Is-All-You-NeedTransformer-논문

https://wandukong.tistory.com/19

https://aistudy9314.tistory.com/63

Abstract

This paper proposes a new, simple network architecture, the Transformer:

- based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.

- superior in quality on machine translation tasks.

- more parallelizable, requiring significantly less time to train.

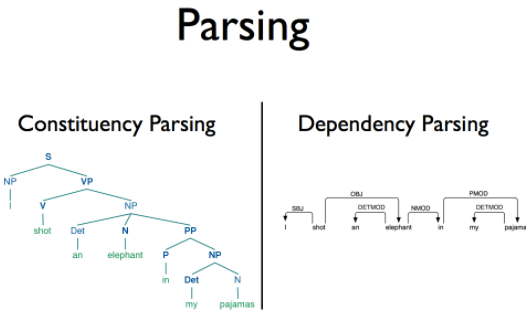

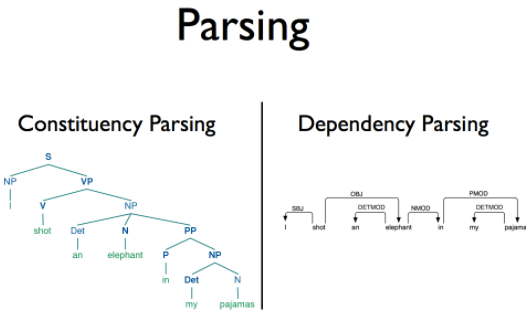
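To make "based solely on attention" concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, the core operation the paper defines in its model architecture section. Assumptions for this sketch: plain Python lists instead of a tensor library, no learned projection matrices (Q = K = V = X), no masking, a single head, and made-up toy inputs. Each row of attention weights is computed independently of the others, which is why all positions can be processed in parallel rather than one step at a time.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Plain-list matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V, computed for all query positions at once."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    # Each row depends only on Q, K, V, never on another row's result: no recurrence.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


if __name__ == "__main__":
    # Hypothetical toy sequence of 3 tokens with d_model = 2.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, w = scaled_dot_product_attention(x, x, x)  # self-attention: Q = K = V = X
    for row in w:
        print([round(p, 3) for p in row])
```

In the full model, Q, K and V would come from learned linear projections and several such heads would run side by side; those parts are omitted here to keep the core computation visible.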

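The Transformer also generalizes to other tasks: it was applied successfully to English constituency parsing (syntactic parsing).

- Constituency parsing: identifies phrase-level structure, such as noun phrases and verb phrases.

- Dependency parsing: identifies head-dependent relations between individual words.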
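The difference between the two kinds of parsing is easiest to see in the shape of their outputs. The sketch below hand-writes both analyses for the hypothetical sentence "the cat sat", using Penn Treebank-style tags (DT, NN, VBD) and Universal Dependencies-style relation labels (nsubj, det); it is an illustration of the data structures only, not a parser.

```python
# Hypothetical toy analyses of "the cat sat", written by hand for illustration.

# Constituency parse: nested phrases (S -> NP VP) as (label, children) tuples;
# a leaf is (POS tag, word).
constituency = ("S", [("NP", [("DT", "the"), ("NN", "cat")]),
                      ("VP", [("VBD", "sat")])])

# Dependency parse: flat (head, dependent, relation) arcs between words; "sat" is the root.
dependency = [("sat", "cat", "nsubj"), ("cat", "the", "det")]


def phrases(tree):
    """Return (phrase list, words): every (label, span text) phrase node, in post-order."""
    label, children = tree
    if isinstance(children, str):  # leaf: (POS tag, word) is a word, not a phrase
        return [], [children]
    found, words = [], []
    for child in children:
        sub_found, sub_words = phrases(child)
        found.extend(sub_found)
        words.extend(sub_words)
    found.append((label, " ".join(words)))
    return found, words


if __name__ == "__main__":
    found, _ = phrases(constituency)
    print(found)
    for head, dep, rel in dependency:
        print(f"{head} --{rel}--> {dep}")
```

A constituency parse is a tree of phrases over spans of the sentence, while a dependency parse is a set of word-to-word arcs with no phrase nodes at all.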

Introduction

Recurrent neural networks (RNNs)

RNNs have been firmly established as the state-of-the-art approach to sequence modeling and transduction problems such as language modeling and machine translation.

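- Recurrent models factor computation along the symbol positions of the input and output sequences.

- Each hidden state is computed from the input at position t and the previous hidden state: input(t), h(t-1) → h(t).

- This inherently sequential computation precludes parallelization within a training example, which becomes critical at longer sequence lengths because memory constraints limit batching across examples.

- Partial remedies: factorization tricks and conditional computation have improved computational efficiency, but the fundamental constraint of sequential computation remains.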
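The sequential bottleneck follows directly from the recurrence input(t), h(t-1) → h(t). The sketch below assumes a minimal Elman-style RNN with a scalar hidden state and a tanh activation, h(t) = tanh(w_h·h(t-1) + w_x·x(t) + b); the weights and inputs are hypothetical, and real RNNs (including LSTMs) use vector states and weight matrices. The point is the loop: step t cannot start until step t-1 has finished, so a sequence of length n needs n strictly sequential steps no matter how much hardware is available.

```python
import math


def rnn_forward(xs, w_h, w_x, b):
    """Minimal scalar Elman-style RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b)."""
    h = 0.0  # initial hidden state h_0
    states = []
    for x_t in xs:  # inherently sequential: each step needs the previous h
        h = math.tanh(w_h * h + w_x * x_t + b)
        states.append(h)
    return states


if __name__ == "__main__":
    # Hypothetical scalar weights and inputs, for illustration only.
    hs = rnn_forward([1.0, 0.5, -1.0], w_h=0.8, w_x=1.0, b=0.0)
    print([round(h, 3) for h in hs])
```

Batching several sequences together parallelizes across examples, but not across the time steps inside one example, which is the limitation the Transformer removes.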

Attention mechanisms

- have become an integral part of compelling sequence modeling and transduction models.

- allow dependencies to be modeled regardless of their distance in the input or output sequences.
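One way to see the "regardless of distance" property: a query compares itself against every key directly, so a key 99 positions away is reached in one step, whereas an RNN would have to carry that information through 99 recurrent updates. The sketch below uses hypothetical 2-d vectors and deliberately leaves out positional encodings (the real Transformer adds them, so in practice weights can depend on position); without them, two identical keys receive exactly the same weight wherever they sit.

```python
import math


def attention_weights(query, keys):
    """Dot-product attention weights of one query over all keys, scaled by sqrt(d_k)."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical vectors; no positional encodings are added.
    query = [1.0, 0.0]
    keys = [[1.0, 0.0]] + [[0.0, 1.0]] * 98 + [[1.0, 0.0]]  # 100 keys
    w = attention_weights(query, keys)
    # Keys 0 and 99 are identical, so they get identical weights in a single step.
    print(round(w[0], 4), round(w[99], 4))
```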